Introducing LLMQuery Framework: Scaling GenAI Automation with Prompt Templates

Large Language Models have completely changed how we automate, create, and solve problems.

Integrating and experimenting with these models across different providers, while also optimizing prompts and benchmarking them against each provider, can feel overwhelming. That’s why I’m excited to introduce llmquery, a project I started this year to make interacting with Large Language Models and prototyping new GenAI-powered applications simpler, faster, and more scalable.

llmquery is all about making it easier to prototype new applications and use cases. It starts with writing a single YAML template (or using one of the built-in templates). From there, llmquery handles the overhead of integrating with commercial and local providers: validating models, counting tokens, setting limits, rendering templates, and running other checks.

My goal is to scale LLMQuery into a single framework that engineering teams use to prototype applications. Every new application, SOP, or process can be expressed as a YAML template, and you can reference a template ID to execute it with all validations out of the box. I see writing one YAML template as equivalent to developing one AI application use case.

For example, let’s say you would like to build a Pull Request Code Review AI application. You can use the built-in YAML template (pr-review-feedback) to get constructive feedback about your Pull Request in a parsable format (e.g., JSON).

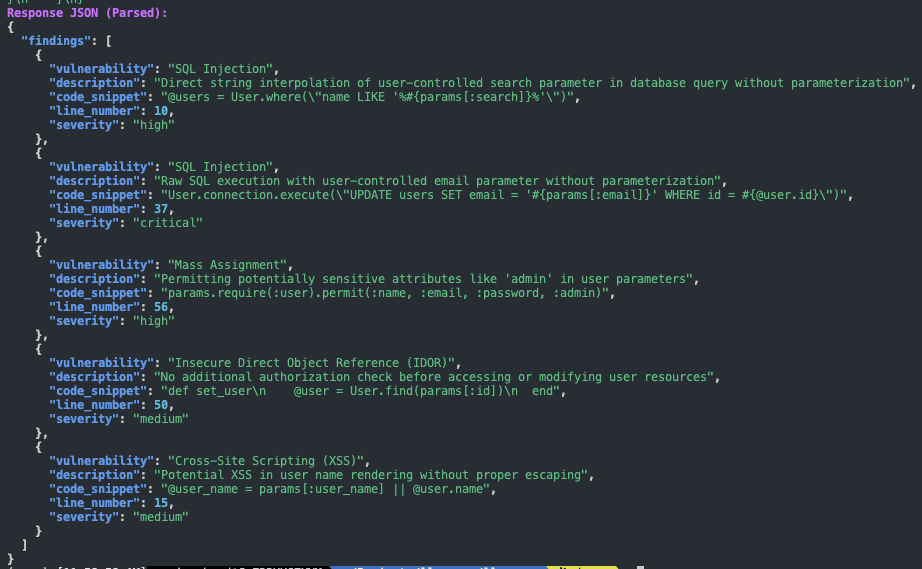
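
Because the feedback comes back in a parsable format, it can feed other automation, such as posting review comments or failing a CI check. A minimal sketch of that consuming step is below. It assumes the model's reply is available as a string and may be wrapped in a Markdown code fence; the `parse_llm_json` helper and the `issues`, `severity`, and `line` fields are hypothetical and do not reflect the actual pr-review-feedback output schema.

```python
import json

FENCE = "`" * 3  # Markdown code fence marker


def parse_llm_json(raw: str) -> dict:
    """Parse a JSON object from a model reply, tolerating a surrounding code fence."""
    text = raw.strip()
    if text.startswith(FENCE):
        # Drop the opening fence line (for example the one tagged "json") ...
        text = text.split("\n", 1)[1] if "\n" in text else ""
        # ... and everything from the closing fence onward.
        text = text.rsplit(FENCE, 1)[0]
    return json.loads(text)


# Hypothetical reply; the real template's fields may differ.
reply = FENCE + 'json\n{"issues": [{"severity": "high", "line": 12}]}\n' + FENCE
feedback = parse_llm_json(reply)
high = [issue for issue in feedback["issues"] if issue["severity"] == "high"]
print(len(high))
```

Parsing defensively like this keeps a pipeline from breaking when a model wraps otherwise valid JSON in formatting.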

Another use case I automated is running SAST security scanning with AI through LLMQuery. The template is called detect-security-vulnerabilities, and you can find it in the templates library.

Here is what the results look like:

Why I Built llmquery

As an engineer who is always experimenting with new automation approaches, I run into the same problems whenever I use LLMs in my projects: managing prompts, dealing with different providers, hitting token limits, and getting inconsistent outputs. I also had to repeat myself on every experiment, rewriting the integration and tests each time. I wanted a solution that could handle all these issues without adding extra overhead. That’s how llmquery came to be.

Here is what llmquery offers:

- Works with Multiple Providers: OpenAI, Anthropic, Google Gemini, and Ollama are all supported.

- Has extensible built-in templates: llmquery comes with a templates library of well-tested production LLM Prompts that can be integrated for various use-cases, including Application Security, AI Security, Code Quality, and general use cases.

- Dynamic YAML Templates: You can create prompts once and reuse them for different tasks by just swapping out variables.

- Built-In Validation: It enforces client-side token limits to prevent limit and cost errors, and catches other common issues upfront.

- Developer-Friendly: Whether you prefer Python or a CLI, llmquery fits into your workflow.
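
To make the template and validation ideas in this list concrete, here is a minimal, self-contained sketch of the general pattern: a prompt with placeholders is rendered from variables, then checked against a client-side token budget before anything is sent to a provider. This is not llmquery's implementation. `render_prompt`, `estimate_tokens`, `check_budget`, and the four-characters-per-token heuristic are assumptions made for illustration; real tokenizers count differently, and llmquery's own templates are YAML files rather than Python strings.

```python
import re

# Hypothetical prompt template; {{ name }} marks a variable to fill in.
TEMPLATE = "Review the following {{ language }} code for security issues:\n{{ code }}"

PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render_prompt(template: str, variables: dict) -> str:
    """Replace every {{ name }} placeholder, failing loudly on a missing variable."""
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"missing template variable: {name}")
        return str(variables[name])

    return PLACEHOLDER.sub(substitute, template)


def estimate_tokens(text: str) -> int:
    """Rough estimate only: assumes about 4 characters per token."""
    return (len(text) + 3) // 4


def check_budget(prompt: str, max_tokens: int) -> str:
    """Reject the prompt client-side if it exceeds the token budget."""
    used = estimate_tokens(prompt)
    if used > max_tokens:
        raise ValueError(f"prompt uses ~{used} tokens, limit is {max_tokens}")
    return prompt


# The same template serves different tasks by swapping variables.
ruby_prompt = render_prompt(TEMPLATE, {"language": "Ruby", "code": "User.all"})
python_prompt = render_prompt(TEMPLATE, {"language": "Python", "code": "eval(data)"})
check_budget(ruby_prompt, max_tokens=100)
```

Failing before the request is sent is the point of the design: a missing variable or an oversized prompt surfaces as a local error instead of a rejected or costly API call.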

How It Works

At its core, llmquery uses YAML templates to define prompts. These templates make it easy to customize queries while keeping them organized and reusable.

```python
import llmquery
from llmquery import LLMQuery

# Ruby on Rails controller action with a SQL injection flaw, used as the input to scan
code = """
def index
  @users = User.where("name LIKE '%#{params[:search]}%'") if params[:search].present?
  @users ||= User.all
end
"""

query = LLMQuery(
    provider="ANTHROPIC",
    templates_path=llmquery.TEMPLATES_PATH,
    template_id="detect-security-vulnerabilities",
    variables={"code": code},
    anthropic_api_key="your-api-key",
    model="claude-3-5-sonnet-latest",
)

print(query.Query())
```

You can view the template here: llmquery-templates library

The API documentation is available here: api-reference.md

What Can You Use llmquery For?

There are so many ways to use llmquery, but here are a few examples to get you started:

- Code Analysis: Automate security reviews or find bugs with structured prompts.

- Security Scanning: Use LLMQuery’s templates to scan for security vulnerabilities, identify code secrets, and classify PII data in unstructured formats.

- Data Analysis: Build automation and workflows for analyzing data with LLMs, and compare results across different providers and models.

- AI Security: Use available templates to build security defenses against AI attacks, such as Prompt Injection.

- PR Summaries: Generate quick, clear summaries for pull request changes.

- Content Creation: Write marketing copy, technical documentation, or anything else you need.

- Translations: Build workflows that support multiple languages with ease.
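
For the cross-provider comparison mentioned in this list, the loop itself can be sketched without committing to any provider. The snippet below is a hypothetical harness, not part of llmquery: `compare_providers` and the `run_query` callable are names assumed for illustration, and in practice `run_query` would wrap whatever call you use to execute a template against a given provider and model. The provider and model names are placeholders.

```python
import time
from typing import Callable


def compare_providers(
    run_query: Callable[[str, str], str],
    targets: list[tuple[str, str]],
) -> list[dict]:
    """Run the same query against each (provider, model) pair and record the outcome."""
    results = []
    for provider, model in targets:
        started = time.perf_counter()
        try:
            output, error = run_query(provider, model), None
        except Exception as exc:  # keep going so one failing provider does not stop the run
            output, error = None, str(exc)
        results.append({
            "provider": provider,
            "model": model,
            "seconds": time.perf_counter() - started,
            "output": output,
            "error": error,
        })
    return results


# Stand-in for a real provider call, so the sketch runs offline.
def fake_run_query(provider: str, model: str) -> str:
    if provider == "BROKEN":
        raise RuntimeError("provider unavailable")
    return f"{provider}/{model}: looks fine"


report = compare_providers(
    fake_run_query,
    [("PROVIDER_A", "model-1"), ("PROVIDER_B", "model-2"), ("BROKEN", "model-3")],
)
for row in report:
    print(row["provider"], row["model"], row["error"] or row["output"])
```

Recording errors next to outputs keeps the comparison complete even when one provider is misconfigured or rate-limited.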

Let’s Build Together!

llmquery is open source. If you’ve got ideas for new features, templates, or providers, I’d love to hear from you. Check out the GitHub repository to contribute or give feedback.

This is just the beginning. I’m looking forward to seeing how you use llmquery to build amazing things. Let’s make working with LLMs simpler and more accessible for everyone.

GitHub Repository: github.com/mazen160/llmquery

Best Regards,

Mazin Ahmed